# High Availability on Entando

To build applications on Entando for high availability (HA), it is best practice to examine your goals, hardware, networking, and application-specific setup, and then optimize the App Engine deployment for that environment. The configurations and tests below are building blocks for a deployment architecture that promotes HA for your application in most situations. They include steps to set up and validate a clustered instance of the Entando App Engine, along with the Redis configuration that supports that instance.

TIP

Scaling an Entando Application without clustered storage assumes that all instances are scheduled to a single node, which requires a ReadWriteOnce (RWO) access mode in conjunction with taints on the other nodes. Weigh the pros and cons of scheduling instances to the same node: doing so maximizes utilization of node resources and lets you recover from an unreachable application instance, but if the node terminates or is shut down, your application will be down while Kubernetes reschedules the pods to a different node.
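The taint half of the single-node approach can be sketched as a small shell function. This is a minimal sketch, assuming you already know the node names (for example, from `kubectl get nodes`). It taints every node except the chosen one with `NoSchedule`, so pods without a matching toleration are not scheduled there. The taint `entando-ha=single-node:NoSchedule` and the function name `taint_all_but` are hypothetical; `KUBECTL` can be overridden, for example with a function that only prints the commands.

```shell
# Sketch: keep Entando pods on one node by tainting all the other nodes.
# Assumption: the taint "entando-ha=single-node:NoSchedule" is a hypothetical
# placeholder; pick your own and make sure no workload you need tolerates it.
KUBECTL="${KUBECTL:-kubectl}"
TAINT="${TAINT:-entando-ha=single-node:NoSchedule}"

# taint_all_but KEEP_NODE NODE...
# Applies TAINT to every listed node except KEEP_NODE.
taint_all_but() {
  keep="$1"
  shift
  for node in "$@"; do
    if [ "$node" != "$keep" ]; then
      "$KUBECTL" taint nodes "$node" "$TAINT" --overwrite
    fi
  done
}
```

For example, `taint_all_but worker-1 worker-1 worker-2 worker-3` taints `worker-2` and `worker-3`. `NoSchedule` does not evict pods that are already running on those nodes, so reschedule them afterward. A taint can be removed later with `kubectl taint nodes NODE entando-ha=single-node:NoSchedule-`. The RWO access mode itself is set on the PersistentVolumeClaim and is not covered here.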

# Clustering

This section describes how to set up a clustered Entando App Engine in the entando-de-app image. The goal is to deploy a clustered instance of the App Engine and verify the scalable deployment and HA of the application.

# Prerequisites

- An existing deployment of an Entando App or the ability to create one.

- If you haven't created a deployment or don't have a YAML file for an Entando deployment, follow the Quickstart instructions.

- The Entando deployment must use a relational database management system (RDBMS). Clustered instances will not work correctly with in-memory databases, because each instance would hold its own separate copy of the data.

- Sticky sessions are recommended when enabling a clustered Entando Application. For example, see Manage NGINX for related affinity settings.
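If your cluster uses the community ingress-nginx controller, cookie-based affinity is enabled with annotations on the Ingress. The sketch below applies the `nginx.ingress.kubernetes.io/affinity` and `nginx.ingress.kubernetes.io/session-cookie-name` annotations. The cookie name `ENTANDO_ROUTE` and the function name are hypothetical examples, other ingress controllers use different settings, and `KUBECTL` can be overridden.

```shell
# Sketch: enable cookie-based sticky sessions on an ingress-nginx Ingress.
# Assumptions: the community ingress-nginx controller is in use, and the
# cookie name "ENTANDO_ROUTE" is a hypothetical example.
KUBECTL="${KUBECTL:-kubectl}"

# enable_sticky_sessions INGRESS_NAME NAMESPACE
enable_sticky_sessions() {
  "$KUBECTL" annotate ingress "$1" -n "$2" --overwrite \
    nginx.ingress.kubernetes.io/affinity=cookie \
    nginx.ingress.kubernetes.io/session-cookie-name=ENTANDO_ROUTE
}
```

Usage: `enable_sticky_sessions YOUR-INGRESS-NAME YOUR-NAMESPACE`, taking the Ingress name from `kubectl get ingress -n YOUR-NAMESPACE`. Because the Ingress is created by the Entando Operator, confirm that the annotations survive later updates to the application.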

# Creating a Clustered App Instance

- Create an Entando deployment via the operator config file or edit an existing deployment YAML file.

- Scale your Entando server application:

```shell
kubectl scale deployment quickstart-deployment -n entando --replicas=2
```

- To view the pods in your deployment:

```shell
kubectl get pods -n YOUR-NAMESPACE
```

You should have two

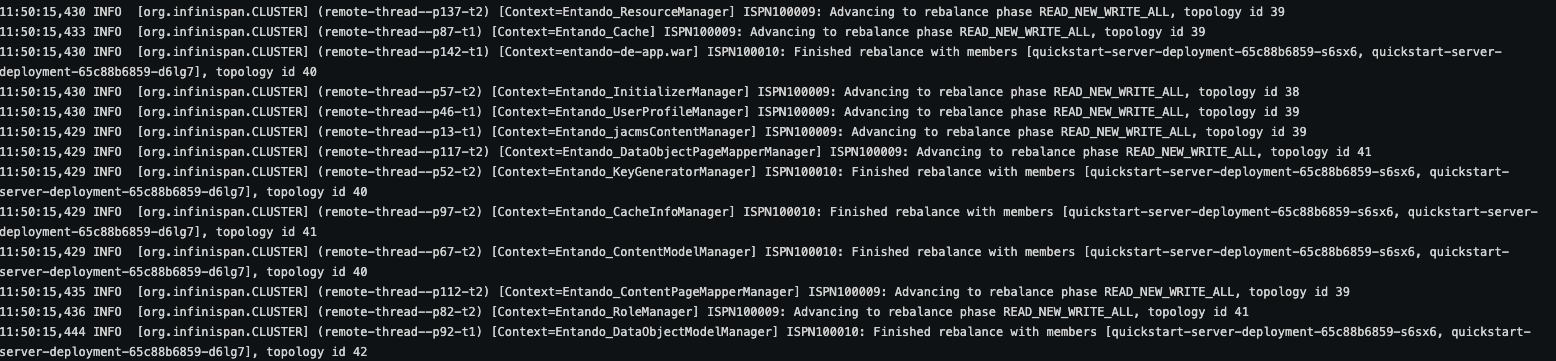

quickstart-deploymentpods in your namespace.Look in the logs of the

quickstart-deploymentin either pod to see logging information related to different instances joining the cluster and balancing the data between the instances. See the screenshot for an example. Your actual logs will vary.
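The scaling step can also be scripted so that it waits for the new replica to become ready, since App Engine pods may take a while to start. This is a sketch that assumes the Quickstart names used above (`quickstart-deployment` in namespace `entando`). The helpers `scale_and_wait` and `ready_replicas` are hypothetical names, and `KUBECTL`, `DEPLOYMENT`, and `NAMESPACE` can be overridden.

```shell
# Sketch: scale the App Engine deployment and wait until the rollout completes.
# Defaults match the Quickstart example; override them for your installation.
KUBECTL="${KUBECTL:-kubectl}"
DEPLOYMENT="${DEPLOYMENT:-quickstart-deployment}"
NAMESPACE="${NAMESPACE:-entando}"

# scale_and_wait REPLICAS
# Scales the deployment, then blocks until all replicas are updated and
# available (or the 10-minute timeout expires).
scale_and_wait() {
  "$KUBECTL" scale deployment "$DEPLOYMENT" -n "$NAMESPACE" --replicas="$1" &&
    "$KUBECTL" rollout status deployment/"$DEPLOYMENT" -n "$NAMESPACE" --timeout=10m
}

# ready_replicas: prints the number of ready replicas reported by the deployment.
ready_replicas() {
  "$KUBECTL" get deployment "$DEPLOYMENT" -n "$NAMESPACE" \
    -o jsonpath='{.status.readyReplicas}'
}
```

After `scale_and_wait 2` succeeds, `ready_replicas` should print `2`.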

# Validating Clustered Instances

This is an advanced exercise and not required or recommended for most deployment scenarios. The steps below validate that the clustered instances are working in your environment and that you have created a high availability deployment.

- Complete the Creating a Clustered App Instance steps above, or have an existing clustered Entando App instance available for testing.

- Retrieve the URL for your `entando-de-app`:

```shell
kubectl get ingress -n YOUR-NAMESPACE
```

- Open the URL in a browser and ensure that the application is working.

- Open a new browser window in incognito or private browsing mode so that no cached data is used and you receive a fresh copy of the running application. Do not navigate to the app yet.

- Delete one of the server deployment pods in your clustered instance:

```shell
kubectl delete pod YOUR-POD-NAME -n YOUR-NAMESPACE
```

- There are other ways to do this. You could also shell into the `server-container` and manually kill the running app process with `kill -9` followed by the app's process ID (for example, `kill -9 357`).

- If you want to test at the hardware level, you could manually terminate a node in your cluster (first ensuring that the pods are scheduled to different nodes).

- In your private/incognito browser window, open the URL to your `entando-de-app`.

- Check that the application continues to render while the pod you deleted is no longer present.

- Wait for Kubernetes to restore your deleted pod.

- Check that the application continues to render after the pod is restored.
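The "continues to render" checks above can be made less manual by polling the application URL while you delete a pod. The sketch below assumes `curl` is installed; it follows redirects and treats an HTTP 200 status as a rough proxy for "renders", without inspecting page content. The helper names `http_status` and `watch_availability` are hypothetical, and `CURL` can be overridden.

```shell
# Sketch: poll the application URL and report every probe that does not
# return HTTP 200, e.g. while a pod is deleted and rescheduled.
CURL="${CURL:-curl}"

# http_status URL: prints the final HTTP status code after redirects;
# curl prints 000 when no response is received.
http_status() {
  "$CURL" -s -L -o /dev/null -w '%{http_code}' --max-time 5 "$1" || true
}

# watch_availability URL COUNT INTERVAL_SECONDS
# Prints each failed probe and a summary; returns non-zero on any failure.
watch_availability() {
  failures=0
  i=1
  while [ "$i" -le "$2" ]; do
    code="$(http_status "$1")"
    if [ "$code" != "200" ]; then
      echo "probe $i: HTTP $code"
      failures=$((failures + 1))
    fi
    i=$((i + 1))
    sleep "$3"
  done
  echo "failures: $failures"
  [ "$failures" -eq 0 ]
}
```

For example, run `watch_availability http://YOUR-APP-URL/ 120 1` in one terminal, delete a pod in another, and check the reported failure count once the pod has been restored.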

# Caching Validation

You can validate the shared cache with a process similar to the clustered instance validation. The high-level steps are:

- Deploy a clustered instance (see Creating a Clustered App Instance above).

- Create data with the App Builder (pages, page templates, content, etc.) using the external route for the application.

- Refer to the logs to note which instance processed the request.

- Terminate that instance.

- Fetch the recently created data and verify that the data are returned.
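The last two caching steps can be combined into one check. This is a sketch, assuming you have identified the pod from the logs and that the created data is reachable at a URL whose response contains a distinctive string (for example, the title of a published page). `verify_after_delete` is a hypothetical helper; `KUBECTL` and `CURL` can be overridden.

```shell
# Sketch of the caching check: delete the pod that handled the write, then
# confirm the new data is still served by the remaining instance(s).
KUBECTL="${KUBECTL:-kubectl}"
CURL="${CURL:-curl}"

# verify_after_delete POD NAMESPACE DATA_URL EXPECTED_TEXT
# POD and DATA_URL are placeholders for your pod name and the data's URL.
verify_after_delete() {
  "$KUBECTL" delete pod "$1" -n "$2" --wait=true || return 1
  body="$("$CURL" -s -L --max-time 10 "$3")"
  case "$body" in
    *"$4"*) echo "found \"$4\" at $3" ;;
    *) echo "missing \"$4\" at $3"; return 1 ;;
  esac
}
```

Usage: `verify_after_delete YOUR-POD-NAME YOUR-NAMESPACE http://YOUR-APP-URL/YOUR-PAGE "Your page title"`.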

# Configuring and Deploying with Redis

In this section, an Entando App Engine instance is deployed using Redis as a cache for data served by the App Engine. For more information on the cache configuration for the App Engine, see high availability in an Entando Application.

# Deploy Redis to Kubernetes

- Create the Redis deployment and expose the endpoint:

```shell
kubectl create deployment redis --image=redis:6 -n YOUR-NAMESPACE
kubectl expose deployment redis --port=6379 --target-port=6379 -n YOUR-NAMESPACE
```

- Install the Redis CLI for your environment per https://redis.io/topics/rediscli.

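- Get the IP for your Redis deployment (the `CLUSTER-IP` of the `redis` service):

```shell
kubectl get service -n YOUR-NAMESPACE
```

- Validate your deployment, replacing `10.43.99.198` with your Redis service IP. The first command should respond with `PONG`; the second should return a counter that increases by one each time you run it.

```shell
redis-cli -h 10.43.99.198 -p 6379 ping
redis-cli -h 10.43.99.198 -p 6379 incr mycounter
```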
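A ClusterIP such as `10.43.99.198` is normally reachable only from inside the cluster, so `redis-cli` on your workstation may not connect. The sketch below reads the IP by service name with a JSONPath query and, as an alternative, runs `redis-cli` inside the Redis pod itself, which the official `redis` image includes. It assumes the `redis` deployment and service created above; the helper names are hypothetical, and `KUBECTL` and `NAMESPACE` can be overridden.

```shell
# Sketch: look up the Redis ClusterIP and ping Redis from inside the cluster.
# Assumes the "redis" deployment and service created above.
KUBECTL="${KUBECTL:-kubectl}"
NAMESPACE="${NAMESPACE:-YOUR-NAMESPACE}"

# redis_cluster_ip: prints the ClusterIP of the "redis" service.
redis_cluster_ip() {
  "$KUBECTL" get service redis -n "$NAMESPACE" -o jsonpath='{.spec.clusterIP}'
}

# redis_ping_in_cluster: runs redis-cli in a pod of the redis deployment.
redis_ping_in_cluster() {
  "$KUBECTL" exec deploy/redis -n "$NAMESPACE" -- redis-cli ping
}
```

`redis_ping_in_cluster` should print `PONG`. Another option is `kubectl port-forward service/redis 6379:6379 -n YOUR-NAMESPACE`, after which `redis-cli -h 127.0.0.1 -p 6379 ping` works from your workstation.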

# Configure the Implementation

- Download the `entando-app.yaml` template:

```shell
curl -sLO "https://raw.githubusercontent.com/entando/entando-releases/v7.1.6/dist/ge-1-1-6/samples/entando-app.yaml"
```

- Add these environment variables to the `EntandoApp` YAML to enable Redis for cache management: `REDIS_ACTIVE`, `REDIS_ADDRESS` (e.g. `redis://localhost:6379`; for the `redis` service created above, typically `redis://redis.YOUR-NAMESPACE.svc.cluster.local:6379`), and `REDIS_PASSWORD`.

```yaml
data:
  environmentVariables:
    - name: REDIS_ACTIVE
      value: "true"
    - name: REDIS_ADDRESS
      value: YOUR-REDIS-URL
    - name: REDIS_PASSWORD
      valueFrom:
        secretKeyRef:
          key: password
          name: YOUR-REDIS-SECRET-NAME
          optional: false
```

NOTE: This example reads `REDIS_PASSWORD` from a Secret, which is the recommended approach. For testing purposes you can hardcode the password in the YAML, but avoid clear-text passwords in deployment files and create and use a Secret as a best practice.

- Deploy your file:

```shell
kubectl apply -f entando-app.yaml
```
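The `secretKeyRef` above expects a Secret named `YOUR-REDIS-SECRET-NAME` that stores the password under the key `password`; because `optional` is `false`, the containers will not start until that Secret exists. A minimal sketch for creating it before you run `kubectl apply` follows. The function name is hypothetical, and it assumes your Redis instance actually requires this password (the plain `redis:6` deployment above does not configure one).

```shell
# Sketch: create the Secret referenced by REDIS_PASSWORD in entando-app.yaml.
# The key must be "password" to match the secretKeyRef; names are placeholders.
KUBECTL="${KUBECTL:-kubectl}"

# create_redis_secret SECRET_NAME NAMESPACE
# Reads the password from the REDIS_PASSWORD variable instead of an argument.
create_redis_secret() {
  if [ -z "${REDIS_PASSWORD:-}" ]; then
    echo "REDIS_PASSWORD is not set" >&2
    return 1
  fi
  "$KUBECTL" create secret generic "$1" -n "$2" \
    --from-literal=password="$REDIS_PASSWORD"
}
```

Usage: set `REDIS_PASSWORD`, then run `create_redis_secret YOUR-REDIS-SECRET-NAME YOUR-NAMESPACE`.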

You now have a high availability Entando cluster that uses Redis for caching.
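To confirm the change took effect, you can list the `REDIS_*` variables on the App Engine deployment's pod template. This sketch assumes the Entando Operator propagates the `EntandoApp` environment variables to the `quickstart-deployment` deployment in namespace `entando`; adjust `DEPLOYMENT` and `NAMESPACE` for your installation. The helper name is hypothetical, and `KUBECTL` can be overridden.

```shell
# Sketch: list REDIS_* environment variable names on the App Engine
# deployment's containers, one per line.
KUBECTL="${KUBECTL:-kubectl}"
DEPLOYMENT="${DEPLOYMENT:-quickstart-deployment}"
NAMESPACE="${NAMESPACE:-entando}"

redis_env_names() {
  "$KUBECTL" get deployment "$DEPLOYMENT" -n "$NAMESPACE" \
    -o jsonpath='{range .spec.template.spec.containers[*].env[*]}{.name}{"\n"}{end}' |
    grep '^REDIS_'
}
```

If the variables were applied, `redis_env_names` should list `REDIS_ACTIVE`, `REDIS_ADDRESS`, and `REDIS_PASSWORD`.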